|

| My First Simulation! (blue = quiet red = loud) |

Maths is not my forte, and I have no idea how acoustics simulation works "under the hood". But I do know my fundamentals (I have my time working at d&b audiotechnik to thank for that). So I'm going to go from first principals and build a really simple interference visualisation tool. I have no idea if what I've done is correct but it feels about right.

Lets keep it simple and say that there can only be two sources, both sources are perfect point sources (they are infinitely small, have a flat frequency response from 0 to infinity, and they can go infinitely loud), and we are only going to work in 2D.

So what am I trying to do? Well I want to see how loud a specific frequency is at every point on a plane. That's too complicated, let's simplify. How about: I want to find out how loud a single frequency is at one point in space, given two sound sources.

That's probably simple enough. That's addition and subtraction of sound waves. That's electroacoustics 101 (if you haven't done this course you really should. It's free! Also read this book. It's not free!).

Time for some maths, yep we need some because we actually want a loudness number in the end!

Addition and Subtraction of Soundwaves

A sine wave:But because computers like to work in radians and humanbeans like to talk about degrees we just need to add a little bit to this equation so that x can be in degrees:y = sin(x)

y=sin((x*pi)/180)

Great there is one sine wave. But we want to add two together:

y=sin((x*pi)/180) + sin((x*pi)/180)

But our two sine waves are not going to arrive at our measurement point at the same time. There will be an offset in arrival time of the two sinewaves. We can see this as a phase difference between the two waves. So we actually need to add a phase difference to the formula above. I'm going to call the phase difference p:

y=sin((x*pi)/180) + sin(((x-p)*pi)/180)

Or if we take out our radian conversions to make it look nicer:

y=sin(x) + sin(x-p)

If you plot the above with p=90 for x=0:540 you get this image (the two signals are red and green, the sum is black):

I made that plot in R using this code:

p <- 90

x <- seq(0,540,1)

w1 <- sin((x*pi)/180)

w2 <- sin(((x-p)*pi)/180)

out <- w1 + w2

plot(x, out, type="l", ylim=c(-2,2))

lines(x, w1, col="green")

lines(x, w2, col="red")

x <- seq(0,540,1)

w1 <- sin((x*pi)/180)

w2 <- sin(((x-p)*pi)/180)

out <- w1 + w2

plot(x, out, type="l", ylim=c(-2,2))

lines(x, w1, col="green")

lines(x, w2, col="red")

But you could use libraCalc or excel too.

Calculating the phase difference

In the calculation above we just decided that p should be 90. But how do we work out what it actually is?Well first of all we need to work out the difference in distance between our measurement point and each of the sources. The easiest way to do this is to put everything on a grid and give everything coordinates.

So lets put source one at (0, -0.86) and source two at (0,0.86). Why? Well those numbers give us a distance of 1.72 between the two sources. 1.72 is half of 3.44. The speed of sound is roughly around 344m/s (it was a good value to choose when working to two d.p!) so we will be able to get predictable patterns for 50Hz, 100Hz, and 200Hz etc. It keeps the maths easy!

For now, let's put the mic at (10,0). We've got a small isosceles triangle with the two sources at one end and the measurement point at the tip. I've set it up like that so that we know that we should have no phase difference between the two. It means that we can check our maths really easily.

Now we need to find the distance between points on a graph (thanks google! Or just remember Pythagoras from highschool...). So then we've got two distances (d1 and d2). Now we just need the difference between these (d2-d1), I'm going to call this difference d (for the positions described above d should be 0).

We've got the difference in distance now how do you turn that into difference in phase angle? With this cute little formula where d is the difference in distance between one source and the measurement point and the other source and the measurement point:

Let's say we're calculating for 100Hz, using the wave equation:360d/λ = p

344/100 = 3.44. So (360*0)/3.44 = 0! Great, we can now calculate the phase angle! Check it with the measurement position of (0,10) you should get a nice predictable number!c/f=λ

Calculating the level

This is all very well but we've just been talking about distance and we've not taken into account that sources get quieter as you move away from them. So we need to work out what the level should be at the measurement point. This means updating the formula for adding sound waves together. All we need to do is add a level multiplier to each sine wave:Now we can plot with level differences:y=L1sin((x*pi)/180) + L2sin(((x-p)*pi)/180)

But we need to calculate what the level should actually be. Because we're using point sources we can use the inverse square law where sourceInitialLevel is the level of the source at 1m.

Level drop in dB = 20*(log10(abs(distance)));

Level at point = sourceInitialLevel - level drop;

Finding the final level at the measurement point

This is the bit that I got stuck on for ages. I was trying to differentiate the sum formula to find the maxima and it was getting really quite messy. Then I took a step back and decided - it's only 360 points (I think you only actually need to do it on 180), why don't I just rattle through them and manually find the maximum point?! So that's what I did.Now we come to a point that I need to think about a bit more before I can give a nice justification for why it's needed: I chose to convert my dBSPL into pressure when it came to adding together the two sinewaves. I calculated the level at the measurement point in dBSPL (using all the stuff above) but then converted that level in to raw Pascals. Once I had found the maximum level of the summed sine wave I converted the raw Pascals back in to dBSPL. I think that's the right way to get correct dBSPL results.

All done!

Putting it all together.

We have now got all of the tools needed to calculate the level at any point in space from two given omnidirectional sources. Now we need to display this data.First build a grid of measurement points, then iterate through the grid calculating the level at each point using the maths from above each time.

- Find the distance to each source from the measurement point.

- Find the difference between the two distances.

- Calculate the level of each source at the measurement point.

- Sum the two sources.

- Find the peak of the summed sine wave.

So you've got a grid of colours, all you have to do now is display them!

I did my version in processing because it's such a fast way to chuck a proof of concept together but you can probably use any language you like. If anyone would like to see my code, or fancy fixing my mistakes ask me and I'll make the BitBucket public (warning: the project has moved on quite a bit from here).

Below you can see two screenshots from my processing project. I created a grid with 0.25m spacing for the measurement points. You can see the sources as white cubes. Each measurement point is coloured depending on how loud it is: red is loud, then it goes through yellow, green and ends with blue for the quiet areas.

|

The mark in the middle of this image just shows the centre of the plane.

|

I wrote this post a good few days ago. The project can now do delay, polarity, and most importantly many sources. Here are some screenshots:

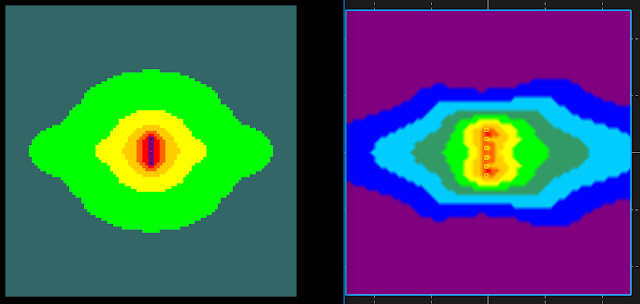

However, I think I have made a mistake. Because here is a screenshot with identical settings in ArrayCalc (from d&b audiotechnik) and my simulator. Sources are the same, resolution is the same, physics should be the same! But the pattern is totally different. I have a lime.

All feedback gratefully received etc etc.....

|

| End-fire cardioid simulation with 3dB per colour band. |

|

| Development mode showing actual level values at measurement points. |

However, I think I have made a mistake. Because here is a screenshot with identical settings in ArrayCalc (from d&b audiotechnik) and my simulator. Sources are the same, resolution is the same, physics should be the same! But the pattern is totally different. I have a lime.

|

| My Simulator vs ArrayCalc - I think I've made a mistake somewhere! |